As I write this, my game has not yet been approved, and it does not have any ambient sound! So I will imagine an ambience that has some relevance to the game.

Since the game takes place in a relatively "creepy" environment, I have come up with a background that follows this sequence:

- The main character is running to find someone in the dark night, in a tiny alley. Location: eastern Los Angeles!

- The main character is under the heavy influence of drugs. His torturers have put him in a chamber. He is totally tripping!

This is my concept of a game like The Godfather (by Electronic Arts). I read about it last night actually! It sounds pretty cool.

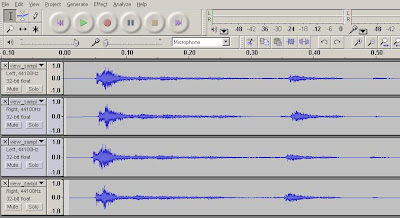

Download the 2-part MP3.

References:

- Christian Haines. "Audio Arts: Semester 4, Weeks 10." Lecture presented at the EMU, University of Adelaide, South Australia, 15/10/2008.

- The Godfather, The Game. Wikipedia. (http://en.wikipedia.org/wiki/The_Godfather:_The_Game) [Accessed 18/10/08]

Back to the main issue, the "sound-and-feeling-testing": the idea was apparently derived from the Indian perception of sounds and their sacredness. Personally, as a materialist, I am not sure whether such definitions of sound, or imagining "souls" for sounds, make sense, but I admit that there definitely is "something" about the effects of sounds on us; music therapists probably know much more about this.
